Run it live here.

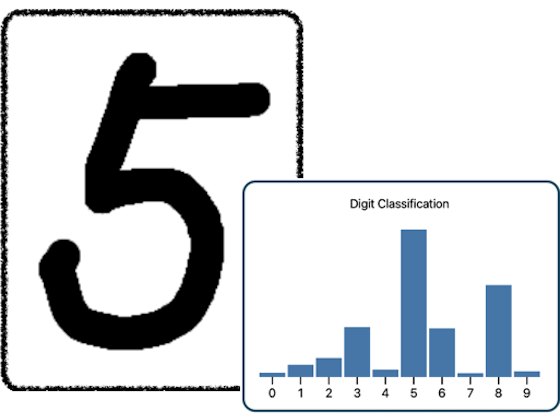

This project classifies a handwritten digit into one of the ten digits on the fly, while it is still being drawn with a mouse or a finger. As you draw on the screen, the classification results update in real time, so you can see the most likely digit, the second most likely, the third, and so on.
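To show how "most likely, second most likely, third" can be derived from a model's output, here is a minimal sketch that turns ten raw scores (logits) into probabilities with a softmax and sorts them. The helper names `softmax` and `rankDigits`, the use of softmax itself, and the example scores are assumptions for illustration, not code taken from the project.

```typescript
// Hypothetical helpers: convert ten raw digit scores (logits) into
// probabilities and rank them from most to least likely.
interface Prediction {
  digit: number;
  probability: number;
}

// Numerically stable softmax: subtract the largest logit before
// exponentiating so Math.exp cannot overflow.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((z) => Math.exp(z - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Pair each probability with its digit (the array index), then sort descending.
function rankDigits(logits: number[]): Prediction[] {
  return softmax(logits)
    .map((probability, digit) => ({ digit, probability }))
    .sort((a, b) => b.probability - a.probability);
}

// Made-up scores where digit 7 is strongest, then 1, then 9.
const ranked = rankDigits([0.1, 2.0, 0.3, 0.2, 0.0, 0.1, -0.5, 3.1, 0.4, 1.2]);
ranked.slice(0, 3).forEach((p, i) =>
  console.log(`#${i + 1}: digit ${p.digit} (${(p.probability * 100).toFixed(1)}%)`)
);
// Prints digits 7, 1 and 9, in that order.
```

Because softmax is monotonic, sorting the probabilities gives the same order as sorting the raw scores; the softmax step only matters if you also want to display a confidence value.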

Internally, it uses a neural network with one hidden layer of 512 neurons and computes the model output (also known as inference) every time it receives a mouse-move or touch-move event. Because inference runs this often, we use TensorFlow.js with its Wasm backend, which is built on XNNPACK, so that the app runs smoothly even on low-end handheld devices. The XNNPACK-based Wasm backend makes efficient use of the CPU through multithreading and Single Instruction Multiple Data (SIMD) instructions.
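The sketch below spells out what a single inference step of a one-hidden-layer network with 512 neurons involves, written in plain TypeScript rather than with TensorFlow.js. Several details are assumptions, not facts from the project: the input is taken to be a 28×28 grayscale image flattened to 784 values, the hidden layer uses ReLU, weights are stored row-major, and random placeholder weights stand in for trained ones.

```typescript
// Sketch of one forward pass through a 784 -> 512 -> 10 fully connected network.
// Assumed (not from the project): 28x28 input, ReLU hidden layer,
// row-major weights, random placeholder parameters.
const INPUT_SIZE = 28 * 28;
const HIDDEN_SIZE = 512;
const OUTPUT_SIZE = 10;

// Dense layer: y = W x + b, where W has `outSize` rows of x.length columns.
function dense(x: Float32Array, w: Float32Array, b: Float32Array, outSize: number): Float32Array {
  const inSize = x.length;
  const y = new Float32Array(outSize);
  for (let o = 0; o < outSize; o++) {
    let acc = b[o];
    const rowStart = o * inSize;
    for (let i = 0; i < inSize; i++) {
      acc += w[rowStart + i] * x[i];
    }
    y[o] = acc;
  }
  return y;
}

// Element-wise ReLU activation.
function relu(v: Float32Array): Float32Array {
  return v.map((z) => Math.max(0, z));
}

// Uniform random values in [-scale, scale], used only as placeholder weights.
function randomArray(n: number, scale: number): Float32Array {
  const a = new Float32Array(n);
  for (let i = 0; i < n; i++) a[i] = (Math.random() * 2 - 1) * scale;
  return a;
}

// Placeholder parameters; a real app would load trained weights instead.
const w1 = randomArray(HIDDEN_SIZE * INPUT_SIZE, 0.05);
const b1 = new Float32Array(HIDDEN_SIZE);
const w2 = randomArray(OUTPUT_SIZE * HIDDEN_SIZE, 0.05);
const b2 = new Float32Array(OUTPUT_SIZE);

// The work that would be repeated on every mouse-move or touch-move event.
function infer(pixels: Float32Array): Float32Array {
  const hidden = relu(dense(pixels, w1, b1, HIDDEN_SIZE));
  return dense(hidden, w2, b2, OUTPUT_SIZE); // ten raw scores, one per digit
}

const logits = infer(new Float32Array(INPUT_SIZE).fill(0.5));
console.log(Array.from(logits));
```

Under these assumptions each call performs 784 × 512 + 512 × 10 = 406,528 multiply-adds. Repeating that on every pointer event is the kind of workload where an XNNPACK backend with SIMD and multiple threads pays off compared with naive scalar loops like the ones above.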

As usual, we are also planning a GPU-accelerated version, most likely built on WebGPU.

You can find it here.
